Until humans learn to respect the decisions made by robots, their utility will be limited, writes Kurt Gray

The radar told its own story. A major storm was heading our way. While I was still at college, I did some work for a geological surveying company, searching for natural gas supplies in the northern Canadian wilderness. Many of the sites we worked on could be accessed only by helicopter. The weather was intensely cold even when it was dry. In winter, in a snowstorm, it was downright dangerous.

The radio flickered. It was our chopper pilot with bad news. The wind and snow would make flying the helicopter perilous, he told us. Ian, our crew chief, had a choice: fly back to base with high winds and low visibility, or stay in the bush overnight with neither food nor shelter. He opted for the latter. We waited until morning for the storm to pass, huddling together around a small fire in the cold darkness. Yet at no point did I blame Ian for his decision. I trusted implicitly his judgment that the alternative – the flight – could have been a whole lot worse.

Now imagine the same scenario 25 years in the future. Would Ian – a living, breathing human being – be making that call? Quite possibly not. Risk assessment, particularly when the risks are directly connected to measurable, predictable factors such as short-term meteorological conditions, is being rapidly farmed out to robots. Would I have trusted “Ianbot” to make the same decision? Even though it would have weighed up the odds of death by freezing versus death by flying and come to a similar conclusion, I would probably have rejected its decision. Why? Humans seldom trust machines.

The empathy factor

The evidence, including my own research, shows that humans are unwilling to trust robots – with their lives or otherwise. This lack of faith in AI has potentially huge consequences for the next few decades. Like an ostensibly competent employee whom, for whatever reason, you do not trust, robots that lack our trust are of limited utility. We risk investing billions to make robots with incredible processing power, only to give them the most mundane of tasks.

The problem comes from empathy, or perceived lack of it. My research shows that humans are almost universally disinclined to credit machines with any notion of ‘feeling’. The calculations made by Ian and Ianbot would be largely similar – assessing the probability of tragedy via exposure versus a snowbound chopper flight. Yet because Ian can feel for my team’s plight and Ianbot cannot, we are much keener to take our chances with the human.

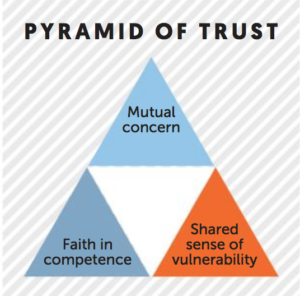

To be a trusted team member, you need at least three qualities: mutual concern, a shared sense of vulnerability, and faith in competence. Of these three crucial factors, mutual concern is the most fundamental – unless you are convinced your boss, colleague or supplier cares about your wellbeing, you are unlikely to trust them (see graphic). Humans know that robots – currently at least – don’t genuinely feel that concern (although they may express it), so trust is hard to come by.

Partly to gain trust, humans act irrationally – a leader of a regiment might offer to take on a very risky solo mission himself, for example. In a more mundane business setting, a senior manager might deliberately pick up a meeting with an unhappy, volatile client despite the fact that doing so would leave her exposed to criticism and perhaps incur damage to her reputation. In the patois of business life, we call it ‘taking one for the team’.

This ultra-human strategy works as a powerful facilitator of trust because it taps into the second point of our pyramid – a shared sense of vulnerability. The manager who meets the volatile client is vulnerable. She might be reported by the client to her superiors; she might even lose her job. Because her team members know this, they are more likely to credit her with their trust. A machine, which cannot feel the dismay of censure or rejection, has nothing to lose by taking such a risk.

Fickle masters

Yet despite our misgivings about machines, we have a strange predilection for believing them to be omnipotent – at least in the short term. Handed a new technology, humans tend at first to think it can do everything: they have an overblown faith in its competency.

Sadly, this faith is as fragile as it is exaggerated. It seems that as soon as the technology makes one key mistake, its human masters are prone to abandoning it altogether. The technology rapidly descends from being perceived as being able to do everything, to being able to do nothing. Whereas managers are often sanguine about their human employees making some mistakes as long as they learn from them, they rarely afford such courtesy to AI. The utility of robots will be limited until we learn to trust them, and that is a long way off. Until robots can prove to us that they can feel, they will remain outside our circle.

Yet AI remains hugely useful. Have I changed my view about the fictional Ianbot making a life-or-death call? Not really. I would still prefer a human being to make the final decision. But I recognise that AI – while limited – is powerful. Had we benefited in those days from meteorological algorithms capable of forecasting snowstorms several days out, I probably wouldn’t have travelled there in the first place.